Can ChatGPT redline contracts accurately, and can you ethically use it? We break down what it can and can’t do, and why general AI falls short for legal work. Explore the risks of hallucinations, legal nuance gaps, and lack of integration, plus how legal-specific AI tools like Gavel Exec fill in the gaps to support contract review and negotiation.

Generative AI has entered the legal mainstream. Lawyers are experimenting with tools like ChatGPT to accelerate contract redlining, drafting, and review. But can you legally and ethically rely on ChatGPT for this type of work?

Short answer: Yes, but only if you stay in full control. Legal professionals must supervise every AI-generated output, protect client confidentiality, and ensure compliance with ethical rules that govern the profession. Using ChatGPT for redlining without these safeguards can expose you to significant risks, both professional and legal.

This article explains how ChatGPT fits into legal workflows, where it helps, where it doesn’t, and what regulations and ethical standards you must follow. It also explores how purpose-built tools like Gavel Exec help legal professionals get the benefits of AI while minimizing the risks and complying with bar requirements.

What ChatGPT Can Actually Do in Contract Redlining

ChatGPT, as a large language model, is good at:

Think of it like a hyper-efficient intern: it can generate text quickly and reasonably well, but it doesn’t know what matters in your deal or what’s acceptable to your client. That distinction is critical and ethically consequential.

Ethical and Legal Requirements for Using AI in Legal Work

State bars and ethics committees have begun to weigh in on how tools like ChatGPT can be used in practice. Here’s a summary of where things stand:

Bottom line: Lawyers can use generative AI, but only if they ensure accuracy, protect confidential information, and disclose usage appropriately where necessary. The burden is on the lawyer, not the AI provider, to comply with professional standards.

Where ChatGPT Falls Short, and Why That Matters Ethically

ChatGPT doesn’t understand your client’s objectives, deal dynamics, or jurisdictional requirements. It predicts text that sounds right but doesn’t evaluate legal impact. It may:

Ethical Implication: Under Rule 1.1 (Competence), you must supervise any AI output and ensure it meets the legal and strategic needs of the matter.

2. 🧠 Hallucination Risk and Factual Inaccuracy

ChatGPT is known to "hallucinate," fabricating legal clauses, case citations, or regulatory references. In Mata v. Avianca, lawyers filed a brief citing AI-generated cases that didn’t exist. That’s not just embarrassing; it led to sanctions.

Ethical Implication: Rule 3.3 (Candor Toward the Tribunal) and Rule 4.1 (Truthfulness in Statements to Others) prohibit false statements, even if generated by AI. You’re responsible for verifying all facts and legal authority the AI presents.

3. 🔒 Client Confidentiality Violations

The public version of ChatGPT operates on shared infrastructure and may retain user inputs for training unless certain settings are enabled. Inputting confidential client data into such a system may:

Risk mitigation: Only use platforms that offer Zero Data Retention, encrypt all data, and sign data protection agreements. Many general AI tools (including ChatGPT) do not meet this bar out of the box.
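The three safeguards named above can be treated as a simple gate before any client material goes near a tool. The following is a minimal sketch, not a compliance program: the `PlatformProfile` record, its field names, and the "Example Tool" values are all hypothetical, and the answers must come from what a vendor has actually committed to in writing.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PlatformProfile:
    """Hypothetical record of a vendor's written commitments (illustrative only)."""
    name: str
    zero_data_retention: bool
    encrypts_all_data: bool
    signed_data_protection_agreement: bool


def missing_safeguards(profile: PlatformProfile) -> list[str]:
    """Return which of the three safeguards the platform does not provide."""
    checks = {
        "Zero Data Retention": profile.zero_data_retention,
        "encryption of all data": profile.encrypts_all_data,
        "signed data protection agreement": profile.signed_data_protection_agreement,
    }
    return [label for label, satisfied in checks.items() if not satisfied]


def cleared_for_client_data(profile: PlatformProfile) -> bool:
    """A platform passes only if no safeguard is missing."""
    return not missing_safeguards(profile)


# Hypothetical example: a tool that encrypts data and retains nothing,
# but has not signed a data protection agreement.
example = PlatformProfile(
    name="Example Tool",
    zero_data_retention=True,
    encrypts_all_data=True,
    signed_data_protection_agreement=False,
)
print(cleared_for_client_data(example))
print(missing_safeguards(example))
```

Listing the missing items, rather than returning a bare yes or no, makes it easier to see what to request from a vendor. Passing this gate is a floor; the lawyer still has to supervise the output.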

4. 🚫 No Awareness of Firm Playbooks or Negotiation Strategy

ChatGPT doesn’t know your fallback positions, clause libraries, or preferred language. It won’t know:

Ethical Implication: Rule 1.1 and Rule 1.3 (Diligence) require you to apply your firm’s legal standards and client preferences. If AI output contradicts your policies, and you don’t catch it, you may compromise your client’s interests.

If AI saves time on document review, how should you bill for that work? Ethically, you must:

Billing transparency is governed by Rule 1.5 (Fees) and Rule 7.1 (Communications Concerning a Lawyer’s Services). Some bar opinions suggest that disclosure may also be needed under Rule 5.3, since generative AI is treated as nonlawyer assistance.

Why Human Oversight Is Legally and Ethically Required

Even if AI does 90% of the redline, you are responsible for 100% of it. That’s not just good practice; it’s what ethics rules demand.

Every AI-generated edit must be:

You can’t delegate legal judgment to an algorithm. You can’t assume the AI “knows what it’s doing.” And if something goes wrong, “the software told me to” is not a defense.

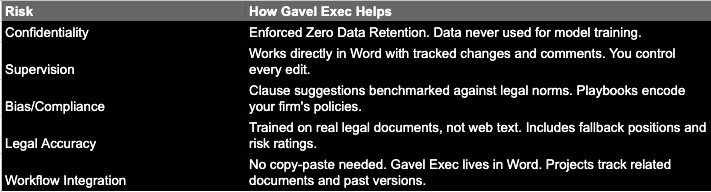

What Legal-Specific AI Tools Like Gavel Exec Do Differently

To address these risks, some tools, such as Gavel Exec, have been designed specifically for lawyers.

Unlike general-purpose AI, Gavel Exec complies with ethical and practical requirements out of the box:

If you're considering AI tools like ChatGPT for legal work, follow these minimum best practices:

DO:

DON’T:

Final Thoughts: ChatGPT is a Tool, Not a Legal Colleague

ChatGPT can be helpful for brainstorming, rewriting, and flagging surface-level issues. But it cannot replace legal expertise, ethical responsibility, or judgment.

Tools like Gavel Exec go further, integrating legal workflows, protecting client data, enforcing your playbook, and making AI a real asset to your team. But even with legal-specific AI, you must stay in the driver’s seat. Ethics rules don’t change just because the technology does.

Use AI. Use it smartly. And above all, use it ethically.

Try Gavel Exec on a free trial here (no credit card required).
